Is The Paris Agreement on Shaky Legs? How to Ensure Successful Implementation

What is needed to make the Paris Agreement a success? This blog post focuses on one of the most central but underappreciated elements – the periodic reviews of progress. States must of course make ambitious and credible contributions in the first place. But if there is no system to ensure that they are monitored and evaluated, the agreement will have very shaky legs.

Article 13 of the Agreement states that the Transparency Mechanism should "provide a clear understanding of climate change action… including clarity and tracking of progress towards achieving Parties' individual nationally determined contributions… including good practices, priorities, needs and gaps" (p. 27).

Almost all countries have already put forward lists of policies to fulfil their commitments. But how will we know whether all of this will add up to limiting global warming to ‘well below’ two degrees Celsius? Answering that question will require concerted efforts to monitor and evaluate climate action. How can we best organize these activities across the globe?

Our brand-new paper, published in Evaluation, addresses this very question of how to organize monitoring and evaluation. The issue matters because the European Union and others are already investing significant resources in climate policy monitoring and evaluation, and many different kinds of actors, such as the European Environment Agency, the European Commission and the Court of Auditors, have become actively involved. Earlier research has already highlighted important shortcomings in climate policy monitoring and evaluation activities. Our paper argues that the organization of these activities is another area that has received far too little attention.

In the paper we argue that there are, in principle, two axes along which to think about governing or organizing monitoring and evaluation activities. The first is a distinction between formal evaluation, driven or funded by government, and informal evaluation, driven by society. Earlier evaluation scholars have argued that evaluation driven, funded or even conducted by governments may benefit from more detailed knowledge of the policy process, potentially higher levels of resources to conduct evaluations, and perhaps also greater uptake of the findings.

At the same time, governmental actors may be under pressure to ‘look good’ and thus shy away from critically evaluating their own policies, particularly the ones that may not be working as envisaged. More informal, societal actors (such as NGOs, foundations, trade unions and others) may therefore be able to step in and provide a more critical eye, provided that they have the interest and resources to do so.

The second axis distinguishes between centralized and de-centralized approaches to governing evaluation. On the one hand, evaluation may be organized in a centralized way, with clear standards that evaluators use everywhere, and with essentially one evaluation actor (governmental or non-governmental) organizing and potentially funding or conducting evaluation. In other words, resources are pooled in order to conduct evaluation tasks.

An advantage of this approach may be higher levels of comparability, given more uniform evaluation standards, and potentially higher levels of resources. On the other hand, scholars from the polycentric governance tradition[1] would argue that such centralized evaluation systems may be prone to failure (what if one picks the wrong or incomplete standards?) and are often insensitive to the vital contextual factors from which policies emerge and which contribute significantly to their success or failure. Thus, another way of organizing evaluation may be a much more de-centralized one, with many different kinds of actors involved.

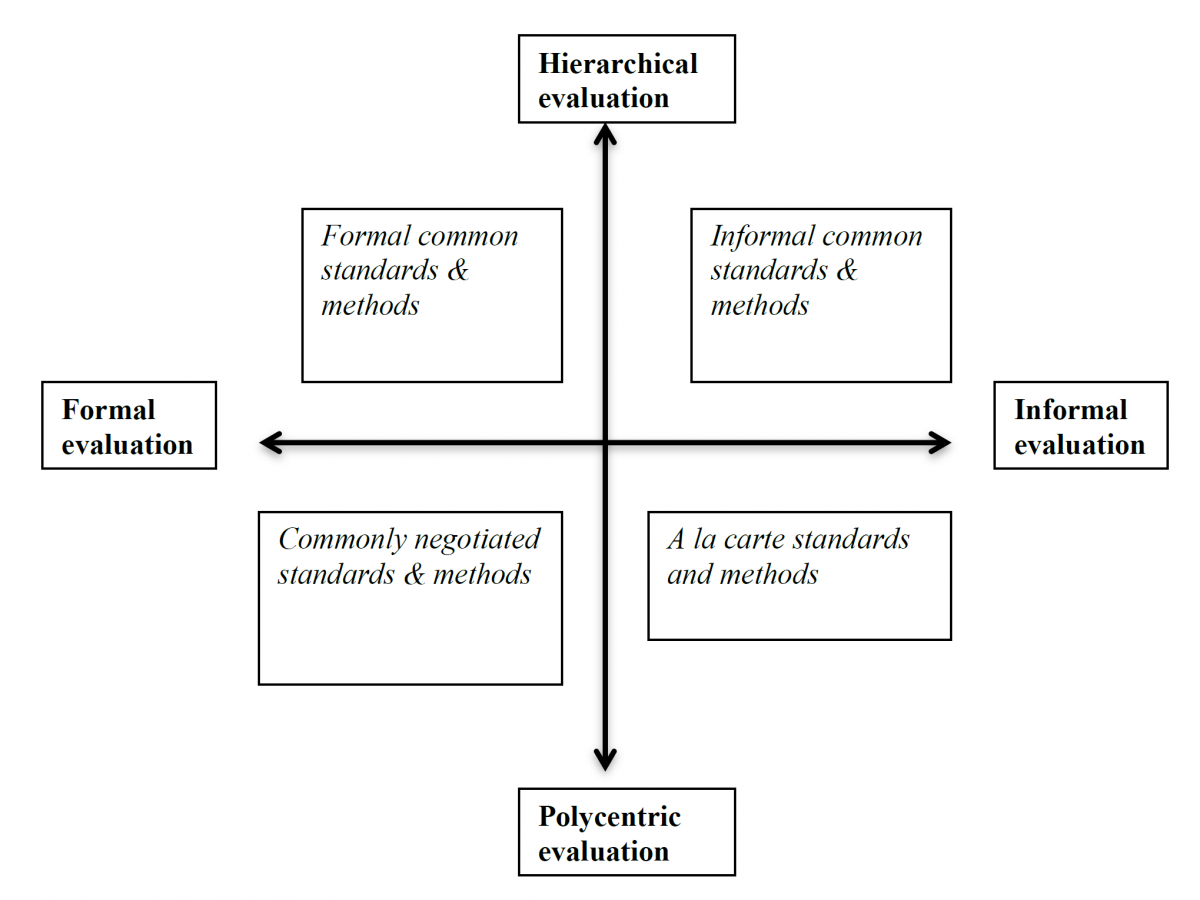

Taking these two axes, we created a new typology in our paper that considers them both at the same time in Figure 1. Doing so opens up new combinations and thus ways to think about governing evaluation. We then drew on existing evidence from climate policy monitoring and evaluation in the European Union to assess to what extent we can detect empirical patterns that chime with our typology.

Figure 1 – Source: Schoenefeld & Jordan, 2017.

The European Union has been a long-standing leader in efforts to monitor and evaluate climate policies, and there are indeed many ongoing efforts, and certainly challenges, in monitoring climate policies. Viewed through our typology, these activities in the European Union do show patterns that correspond to its four quadrants. The new typology is thus a useful way to comprehend evaluation activities, and we hope that it will open up new ways of governing them.

For example, the Monitoring Mechanism for climate policies and measures, operated by the European Environment Agency, contains negotiated standards and methods but, once these are set, operates in a fairly hierarchical way. Both the European Commission (formal) and more informal evaluation organizations have endeavoured to create evaluation standards, which could be extended to the climate domain. Finally, a meta-analysis[2] of climate policy evaluation in the EU has revealed a vast number of studies that used a range of different standards and methods (à la carte). As states are currently hammering out the details of transparency in the Paris Agreement, they should keep these different options and trade-offs in mind.

What, then, is necessary in order to implement the Paris Agreement? We argue that part of the answer lies in considering how to build successful and enduring systems to monitor and evaluate ongoing climate policy efforts, and that how these activities are organized matters crucially. Our paper reveals that there is a range of organizational choices and options in the climate policy monitoring and evaluation domain, and that different options have implications for the practice and results of evaluation. It is worth thinking carefully about how the review processes are organized, and by whom, in order to generate systems that capture the full extent of knowledge and understanding of our climate policies.

The blog post originally appeared on Environmental Europe.